Introduction

Centralising servers and other active equipment effectively requires a different approach to cooling and protecting that equipment. The solution is a data center.


Information

Modern data centers differ significantly from the early facilities built to house servers and other equipment. The original data centers were established in the 1990s and relied on Internet connections as their foundation. Large data halls were built primarily as well-protected spaces offering physical security, an uninterrupted power supply and adequate capacity of communication lines, mostly optical. The individual cabinets were then leased to users for their technical and Internet applications. These centers almost always had raised floors with a high load capacity, beneath which all cabling and cooling systems were installed. Cooling was mostly centralized, so the entire room was air conditioned regardless of how the thermal load was distributed, with no effective way to regulate cooling for an individual cabinet or for the data hall.

With advances in telecommunications, new protocols and higher transmission line capacity, high-speed connections became available without the need to place equipment directly on the backbone connections. A second revolution took place in processing power and storage capacity. Processor performance improved dramatically, and multi-core processors and new operating systems were introduced. Hard drives and other storage media multiplied in capacity. Server operating systems began sharing the available resources among multiple simultaneously running applications, paving the way for virtualization: running multiple operating systems on a single physical computer.

Nowadays, many companies run their applications either on dedicated servers for specific purposes or on popular virtualization and cloud-hosting services. Both methods require high-density computing power. Because these applications are critical to running a business or institution, they require protection against power failure, physical protection and controlled cooling. All these aspects are covered by the concept of a data center. Over time, a standard was established for the design and construction of data centers.

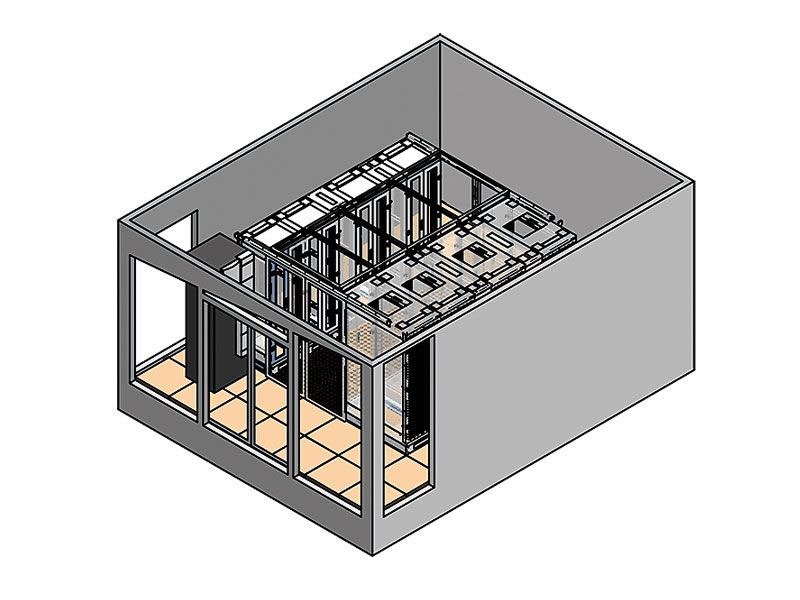

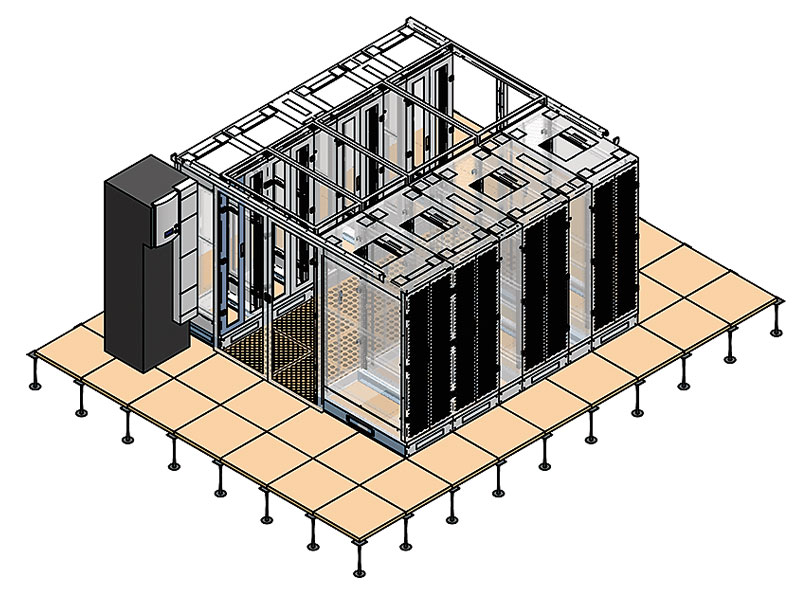

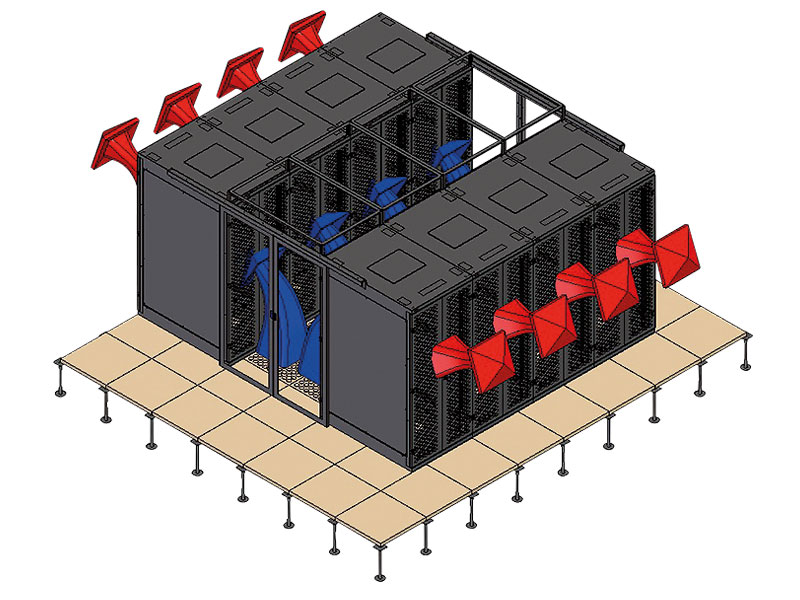

Cabinets are placed in groups, usually in two rows spaced 1,200 mm apart (two standard raised floor tiles). The aisle between the cabinets is then roofed and closed at the ends by sliding doors. Larger data centers may feature dividing doors within these units, creating smaller sections. The main products in our company's data center solution are high-load data cabinets (rated from 1,200 kg to 1,800 kg), accompanied by other components such as aisle roofs of different types, self-closing sliding aisle doors and blanking panels. Cabinets can be built as colocation cabinets (divided into multiple sections) with a variety of front and rear doors, locks and other functions.
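The figures above lend themselves to a quick planning check. The following is a minimal sketch, not a sizing tool: it assumes a 600 mm floor tile (implied by a 1,200 mm aisle spanning two tiles), and the function names and equipment masses are hypothetical, chosen only for illustration.

```python
from typing import Iterable

# Assumption: tile edge implied by the text (1,200 mm aisle = two tiles).
TILE_MM = 600


def aisle_tiles(aisle_width_mm: int, tile_mm: int = TILE_MM) -> int:
    """Return how many whole floor tiles span the aisle width."""
    if aisle_width_mm % tile_mm != 0:
        raise ValueError("aisle width is not a whole number of tiles")
    return aisle_width_mm // tile_mm


def fits_rating(equipment_kg: Iterable[float], rating_kg: float) -> bool:
    """Check that the total installed mass does not exceed the cabinet rating."""
    return sum(equipment_kg) <= rating_kg


if __name__ == "__main__":
    print(aisle_tiles(1200))
    # Hypothetical load: 40 devices of 35 kg each (1,400 kg in total).
    load = [35.0] * 40
    print(fits_rating(load, 1200), fits_rating(load, 1800))
```

In this illustrative case the load would exceed a 1,200 kg cabinet but fit one rated for 1,800 kg. The floor loading itself has to be verified separately.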

Where a raised floor cannot be used (low room height, low permissible floor loading and so on), we can offer an alternative in the form of In-Row cooling units with a top media inlet and a condensate pump. This advanced solution offers a very large installed cooling capacity in a small footprint.

Our specialists will be glad to help you choose the optimum solution for your needs. By selecting the right type of cabinet and accessories, you can significantly reduce the cost of operating your equipment.

Designing the cooling is a critical stage of building a data center, and it has no single solution. It depends on the cabinet arrangement, the size and distribution of the heat load, the choice of thermal scheme (such as hot/cold aisle or zonal distribution of cold air) and many other aspects. When selecting the most suitable arrangement, it is necessary to take into account the type of cooling system (under-floor cooling, In-Row cooling units …) and to select the outdoor part of the system with regard to the coolant used. The choice of cooling medium should consider outdoor climatic conditions, the distance between the data center and the external units, and the elevation difference between them. Depending on these conditions, we can choose water cooling with an appropriate addition of antifreeze, or a system operating with a refrigerant.

To ensure safety and redundancy for service operations, the complete system must be properly designed, both inside the data center and at the radiators or condensers. It is also necessary to control humidity. Humidity levels below 30 % pose a risk of damage to installed equipment from electrostatic discharge, while high humidity can lead to condensation.
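The humidity limits can be expressed as a simple monitoring check. This is a minimal sketch under stated assumptions: the 30 % lower limit comes from the text, while the upper limit is not specified here, so it is left as a required parameter. The function name and the 60 % value in the usage lines are hypothetical.

```python
def humidity_status(rh_percent: float, high_limit: float,
                    low_limit: float = 30.0) -> str:
    """Classify a relative humidity reading against the configured limits.

    low_limit defaults to the 30 % figure from the text; high_limit must be
    supplied by the designer because the text gives no upper figure.
    """
    if not 0.0 <= rh_percent <= 100.0:
        raise ValueError("relative humidity must be between 0 and 100 %")
    if low_limit >= high_limit:
        raise ValueError("low_limit must be below high_limit")
    if rh_percent < low_limit:
        return "too dry: electrostatic discharge risk"
    if rh_percent > high_limit:
        return "too humid: condensation risk"
    return "ok"


if __name__ == "__main__":
    # 60 % is an illustrative upper limit only, not a value from the text.
    for reading in (25.0, 45.0, 75.0):
        print(reading, humidity_status(reading, high_limit=60.0))
```

In practice, condensation also depends on surface temperatures, so a fixed upper limit is only a first approximation.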

In our portfolio you will find cooling systems from leading manufacturers with extensive experience in cooling data centers and telecommunication equipment. Thanks to our close cooperation with their development teams and the support they provide, we can offer proven and guaranteed solutions. Designing cooling systems for data centers that are functional, reliable and economical in both investment and operation is not an easy matter. Our specialists are fully available to recommend the optimum solution in terms of investment and operating costs.